Network configuration for Conveyor

AWS

VPC Setup

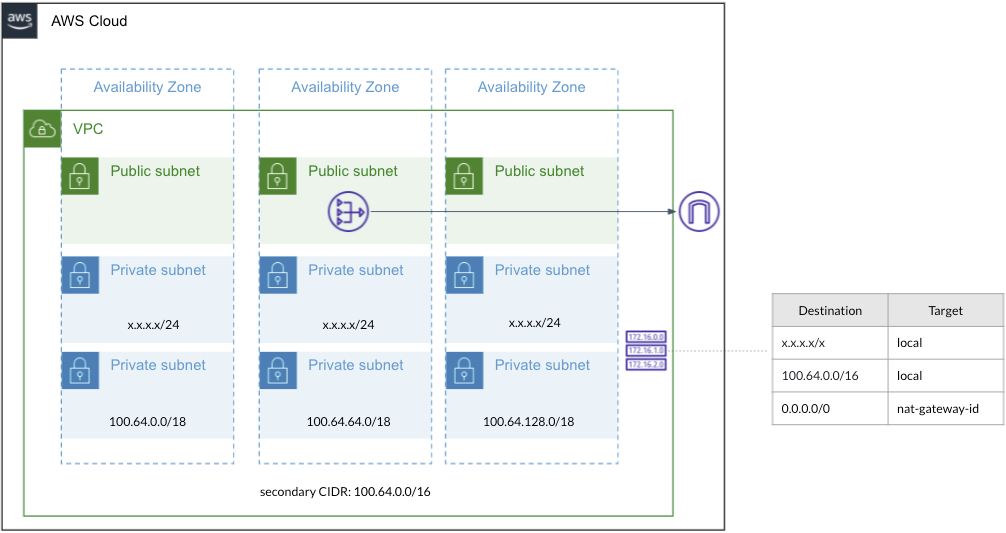

The diagram in this section shows the minimal VPC setup needed to support a cluster.

VPC setup

Associate the secondary CIDR 100.64.0.0/16 with your VPC. This range is part of the shared address space (100.64.0.0/10) reserved for carrier-grade NAT, so it should only be used behind NAT and should not be connected to the rest of your network setup.

Public subnets

Set up 3 public subnets in 3 availability zones. The CIDR size for these subnets is not important. At least one of them should contain a NAT gateway that can route traffic to the internet. To increase availability and limit cross-zone traffic, you can add NAT gateways to the other public subnets.

Private subnets

Set up 3 private subnets of at least /24 size in the primary VPC CIDR range. These subnets will host the nodes of the Kubernetes cluster. Update their route tables to allow internet access through the NAT gateway.

Set up 3 private subnets, each a /18 from the secondary CIDR (100.64.0.0/18, 100.64.64.0/18, 100.64.128.0/18). These subnets will host the Kubernetes pods. Their route tables should have no route to the internet; traffic from them should only be routed internally.
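The pod subnet layout above can be checked with a little CIDR arithmetic. The following is a minimal sketch using Python's standard `ipaddress` module. The helper names `pod_subnets` and `usable_addresses` are hypothetical and only for illustration. The count of 5 reserved addresses per subnet is standard AWS behavior, not something specific to Conveyor.

```python
import ipaddress

# Secondary CIDR taken from the text above.
SECONDARY_CIDR = ipaddress.ip_network("100.64.0.0/16")

# Shared address space reserved for carrier-grade NAT (RFC 6598).
SHARED_ADDRESS_SPACE = ipaddress.ip_network("100.64.0.0/10")

# AWS reserves 5 addresses in every subnet (the first four and the last).
AWS_RESERVED_PER_SUBNET = 5


def pod_subnets(cidr, new_prefix=18, count=3):
    """Return the first `count` subnets of size `new_prefix` inside `cidr`."""
    return list(cidr.subnets(new_prefix=new_prefix))[:count]


def usable_addresses(subnet):
    """Number of addresses left for pods after the AWS reservation."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


if __name__ == "__main__":
    # The secondary CIDR must fall inside the carrier-grade NAT range.
    assert SECONDARY_CIDR.subnet_of(SHARED_ADDRESS_SPACE)
    for subnet in pod_subnets(SECONDARY_CIDR):
        print(subnet, usable_addresses(subnet))
```

Each /18 leaves a little over 16,000 addresses for pods, and the fourth /18 of the secondary CIDR (100.64.192.0/18) stays unallocated in this layout.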

Additional information:

- https://aws.amazon.com/premiumsupport/knowledge-center/eks-multiple-cidr-ranges/

- https://aws.amazon.com/about-aws/whats-new/2018/10/amazon-eks-now-supports-additional-vpc-cidr-blocks/

- https://www.eksworkshop.com/beginner/160_advanced-networking/secondary_cidr/

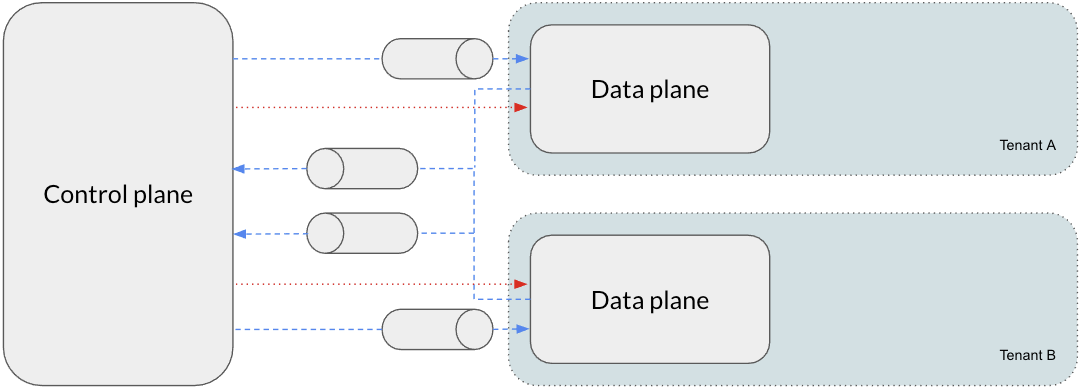

Data plane Connection

The control plane and data plane have synchronous and asynchronous bidirectional communication. The asynchronous communication leverages AWS SQS queues with a bidirectional trust relationship. For the synchronous communication, you can choose to either use an internet-based connection or use AWS PrivateLink.

Internet

By default, we expose an NLB in the public subnets of the data plane. All traffic from the control plane uses this endpoint to communicate with the corresponding cluster services.

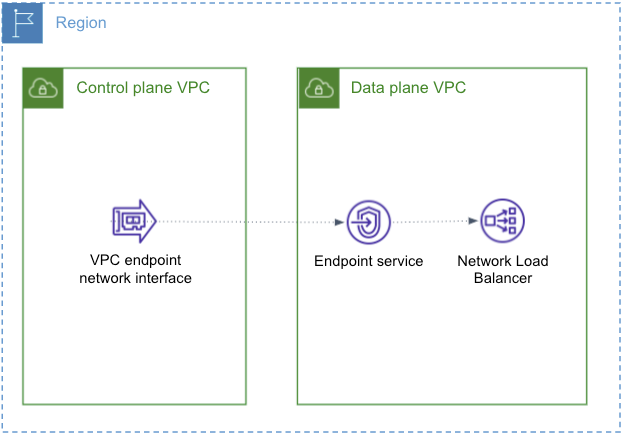

AWS PrivateLink

In some setups it might not be possible to have an internet-facing service. In those cases, we will set up a VPC endpoint service in the data plane connected to a private network load balancer. This allows the control plane to reach the data plane without exposing traffic to the public internet.

Azure

Virtual Network setup

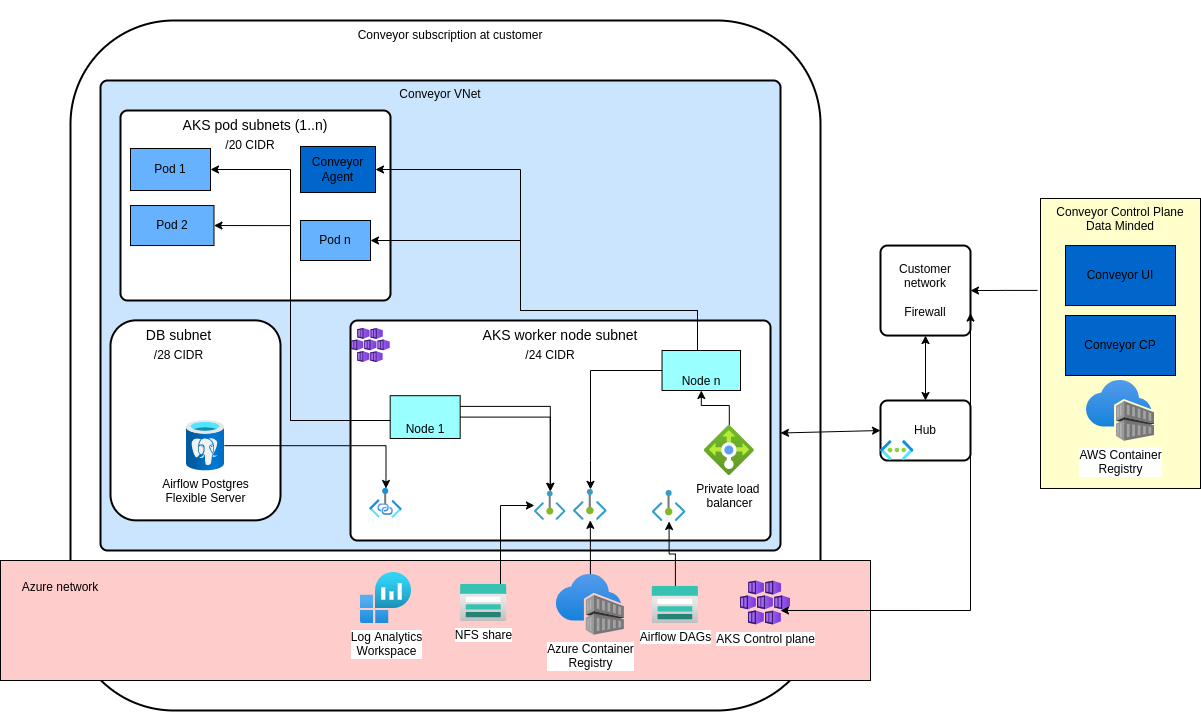

The image describes a secure network setup in which the customer manages the Virtual Networks and all network connectivity from the Virtual Networks goes through the customer firewall. In this case the customer needs to:

- Provide a public IP which we can use to communicate from the control plane

- Set up the routing tables to allow for network connectivity. We do not create a public load balancer for inbound or outbound connectivity.

- Configure the firewall to support AKS

Alternatively, in a more lightweight setup, we create and manage the Virtual Networks ourselves and provision a public IP for the AKS load balancer, which is used for both inbound and outbound connectivity.

Azure Virtual Networks

- DB subnet: hosts the PostgreSQL flexible server. This can be a small subnet of 10 IPs.

- AKS pod subnet: the largest subnet, since it must provide an IP for every pod your workloads run. We typically recommend at least 2000 IPs.

- AKS node subnet: determines the maximum number of nodes in your cluster and thus depends on your workload. A good recommendation is 256 IPs.
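To turn the IP counts above into subnet prefix lengths, a small calculation helps. The sketch below is illustrative only: the function name `smallest_prefix` is hypothetical, and the text does not state whether the recommended counts are raw subnet sizes or usable addresses. Azure reserves 5 addresses in every subnet, so both interpretations are shown.

```python
# Azure reserves 5 addresses in every subnet.
AZURE_RESERVED_PER_SUBNET = 5


def smallest_prefix(required_ips, reserved=AZURE_RESERVED_PER_SUBNET):
    """Return the longest IPv4 prefix length whose subnet can hold
    `required_ips` usable addresses plus `reserved` platform addresses."""
    total = required_ips + reserved
    # Number of host bits needed so that 2**host_bits >= total.
    host_bits = (total - 1).bit_length()
    return 32 - host_bits


if __name__ == "__main__":
    # IP counts taken from the list above.
    recommendations = {"DB": 10, "AKS pods": 2000, "AKS nodes": 256}
    for name, ips in recommendations.items():
        as_usable = smallest_prefix(ips)           # counts read as usable IPs
        as_raw = smallest_prefix(ips, reserved=0)  # counts read as raw subnet size
        print(f"{name}: /{as_usable} (usable), /{as_raw} (raw)")
```

Read as raw sizes, the recommendations correspond to /28, /21, and /24 subnets. If 256 usable node addresses are needed, the node subnet grows to a /23.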

Private endpoints

By default, we always use private endpoints to connect to Azure services from within the Virtual Network to ensure we do not go over the public internet. To set this up correctly, we create private DNS zones. If you have custom DNS servers, you should create conditional forwarding for these private zones yourself.

Data plane Connection

The control plane and data plane have synchronous and asynchronous bidirectional communication. The asynchronous communication leverages AWS SQS queues with a bidirectional trust relationship. For the synchronous communication, a public IP is needed: either you set one up in the AKS subnet yourself (required if you want the Virtual Networks behind your firewall), or we create one for you.