7. (Optional) Check out PySpark streaming

If you have PySpark streaming enabled in your template, you can now test it out.

7.1. Check the streaming specification

In your IDE, open the file streaming.yaml in the root of your project directory.

The file will look something like this:

```yaml
streamingApplications:
  - name: console
    sparkSpec:
      numberOfExecutors: 1
      driverInstanceType: mx.micro
      executorInstanceType: mx.micro
      application: "local:///opt/spark/work-dir/src/$PROJECT_NAME/streaming_app.py"
      applicationArgs:
        - --env
        - "{{ .Env }}"
```

As you can see, the file defines a single streaming application called 'console', which runs the code in the streaming_app.py file.
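The entries under applicationArgs are handed to streaming_app.py as command-line arguments. As a minimal sketch, assuming the script parses them with argparse and that "{{ .Env }}" is rendered to the name of the environment (for example dev) before the application starts, the receiving side could look like this. parse_args is a hypothetical helper, not the template's actual code:

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the arguments listed under applicationArgs in streaming.yaml.

    Hypothetical helper: the real streaming_app.py in the template may differ.
    """
    parser = argparse.ArgumentParser(description="console streaming application")
    # Mirrors the "- --env" entry; assumes "{{ .Env }}" has already been
    # rendered to the environment name (e.g. "dev") when the app starts.
    parser.add_argument("--env", required=True, help="Conveyor environment name")
    # With argv=None, argparse falls back to sys.argv[1:].
    return parser.parse_args(argv)
```

Passing an explicit list, as in parse_args(["--env", "dev"]), makes the helper easy to try out locally; without an argument it reads the real command line.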

For all configuration options of streaming.yaml, see the docs on Spark Streaming.

7.2. Streaming UI

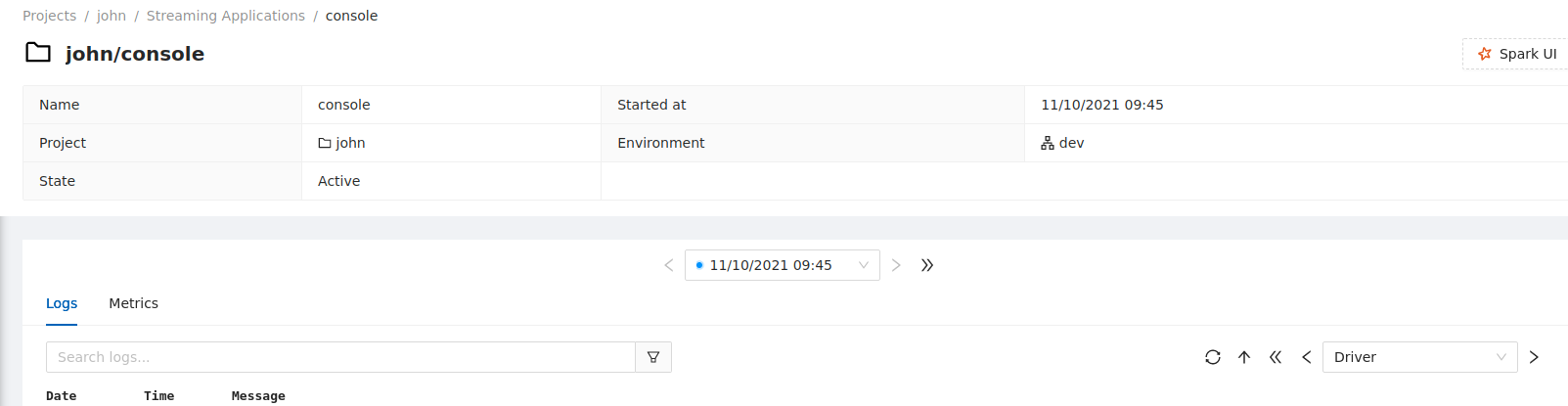

Because we deployed the project earlier, the Spark Streaming application should already be running. To verify this, open the Conveyor UI, navigate to your environment and click the streaming application tab.

You should see your project's streaming application running. Clicking the application takes you to its logs; from this view you can also open the Spark UI.
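What you find in those logs depends on what streaming_app.py does. If the application writes to Spark's built-in console sink (an assumption; check the template's code), every micro-batch is printed to the driver's stdout as a small table. A minimal sketch of such a query, using Spark's built-in rate test source; start_console_query is a hypothetical name:

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:  # only used for type hints; nothing is imported at runtime
    from pyspark.sql import SparkSession
    from pyspark.sql.streaming import StreamingQuery


def start_console_query(spark: "SparkSession", rows_per_second: int = 1) -> "StreamingQuery":
    """Hypothetical example: start a toy query that prints each micro-batch."""
    # The built-in "rate" source generates (timestamp, value) rows for testing.
    stream = (
        spark.readStream.format("rate")
        .option("rowsPerSecond", rows_per_second)
        .load()
    )
    # The "console" sink writes every micro-batch to the driver's stdout,
    # which is the output you would then read in the application logs.
    return stream.writeStream.format("console").outputMode("append").start()
```

In a real entry point you would obtain the session with SparkSession.builder.getOrCreate() and then call awaitTermination() on the returned query, so the driver keeps running instead of exiting right after the query starts.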