Publishing lineage to DataHub

Enabling DataHub lineage for all dags

Conveyor integrates with DataHub out of the box. To connect Airflow to your DataHub cluster, you have to do two things: create the Airflow DataHub connection, and enable the DataHub integration on an environment.

As of Airflow 3.0, we use the OpenLineage plugin instead of the DataHub plugin to push events to DataHub. We switched because OpenLineage is the standard for metadata and lineage collection, the plugin is more actively maintained, and it supports Airflow 3.

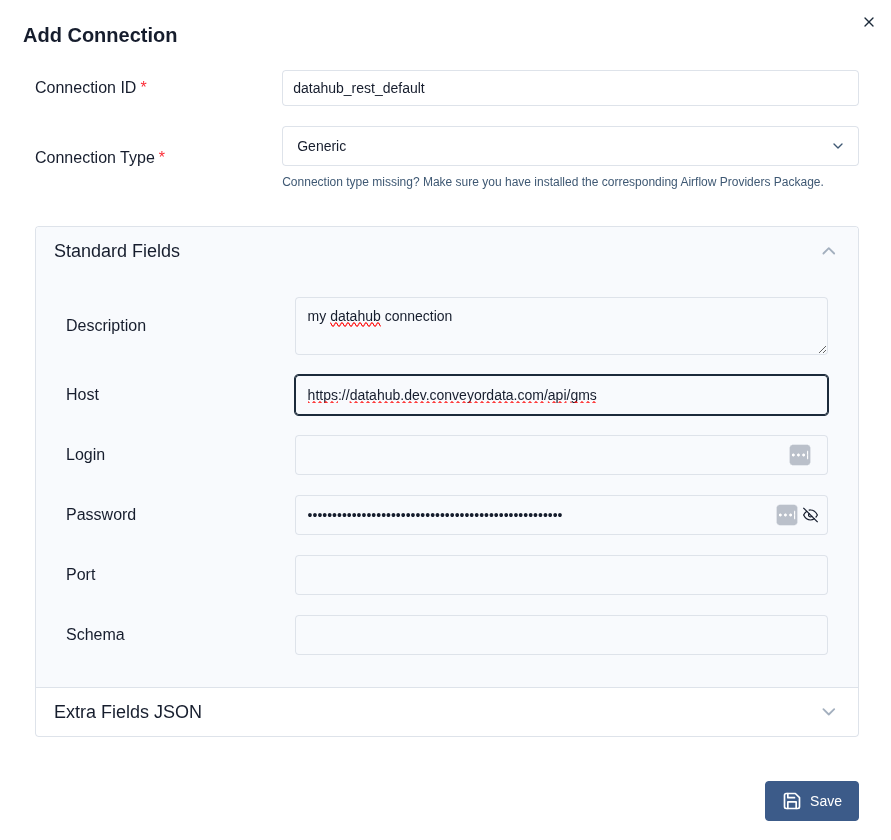

Creating the Airflow DataHub connection

You have to create a Generic connection.

The default name used for the connection is datahub_rest_default.

You can configure the connection in the Airflow UI by going to Admin, then Connections, and pressing the plus button.

Your configuration should look similar to the screenshot below. Your password should be a DataHub auth token.

Make sure that you configure the DataHub metadata service (also known as gms) as the server endpoint.

If you deploy DataHub using their helm chart, the gms backend can be reached as follows: https://<hostname>/api/gms

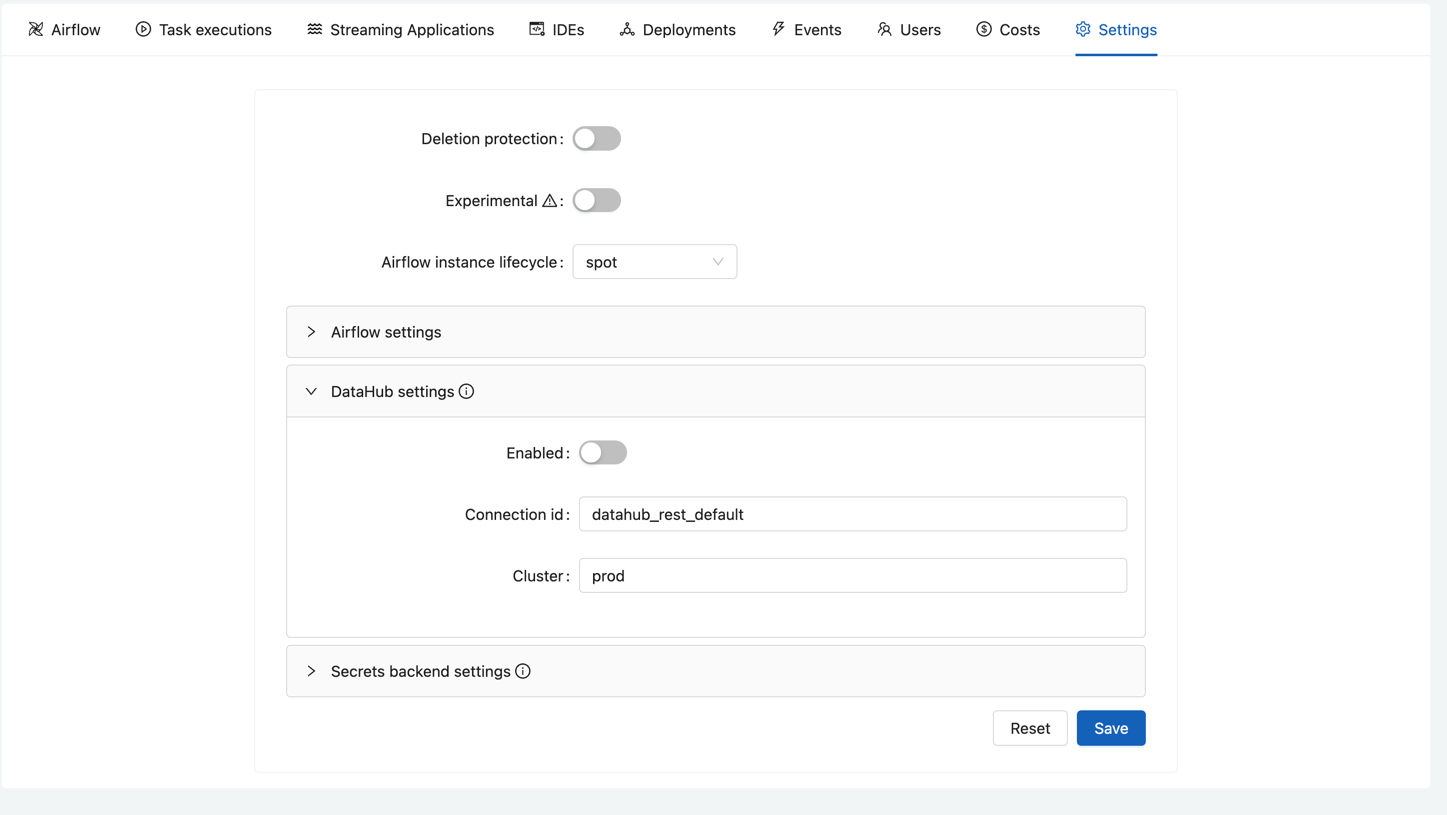

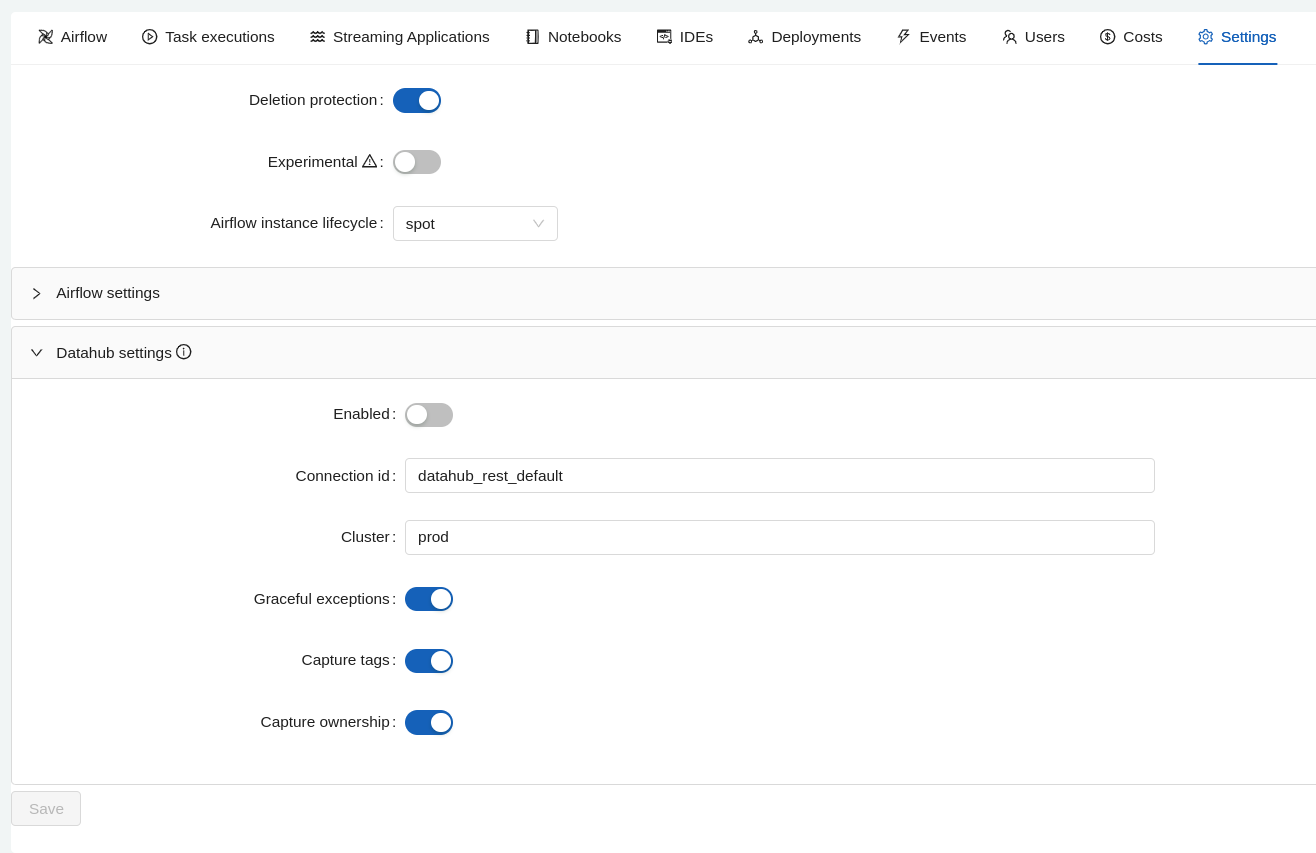
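As a rough sketch of the same settings in code form, the snippet below assembles the connection fields described above: a Generic connection whose host is the gms endpoint and whose password is the DataHub auth token. The hostname `datahub.example.com`, the token value and the helper name `datahub_connection_fields` are placeholders for illustration. Airflow itself can read a connection from an `AIRFLOW_CONN_<CONN_ID>` environment variable holding JSON like this; whether your Conveyor environment lets you set such a variable is an assumption, so treat the UI as the documented path.

```python
import json


def datahub_connection_fields(hostname: str, token: str) -> dict:
    """Return the fields of the Generic connection described above.

    `hostname` and `token` are placeholders supplied by the caller.
    """
    return {
        "conn_type": "generic",
        # DataHub metadata service (gms) endpoint, as exposed by the helm chart
        "host": f"https://{hostname}/api/gms",
        # The password field carries the DataHub auth token
        "password": token,
    }


fields = datahub_connection_fields("datahub.example.com", "my-token")
# The connection id datahub_rest_default maps to this variable name.
print("AIRFLOW_CONN_DATAHUB_REST_DEFAULT=" + json.dumps(fields))
```

Never commit a real token; the value here only shows where it goes.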

Enabling the DataHub integration

Once the connection is set up, you can activate the DataHub integration on an environment. You can configure the integration using the Conveyor web app, the CLI, or Terraform.

Configuration options

These configuration options are only supported on Airflow 2.

When you enable the DataHub integration, you can turn on three configuration options:

- capture ownership: extracts the owner from the dag configuration and captures it as a DataHub corpuser.

- capture tags: extracts the tags from the dag configuration and captures them as DataHub tags.

- graceful exceptions: if enabled, the exception stacktrace is suppressed and only the exception message is visible in the logs.
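To make the first two options more concrete, here is an illustrative mapping from dag settings to DataHub identifiers. It is not the plugin's code: the `dag_config` dictionary and both helper functions are hypothetical, and the sketch only relies on DataHub's URN conventions for users (`urn:li:corpuser:...`) and tags (`urn:li:tag:...`).

```python
def owner_urn(owner: str) -> str:
    """DataHub URN for a user, following the corpuser convention."""
    return f"urn:li:corpuser:{owner}"


def tag_urn(tag: str) -> str:
    """DataHub URN for a tag."""
    return f"urn:li:tag:{tag}"


# Hypothetical dag settings, standing in for the owner and tags of a real dag.
dag_config = {"owner": "data-team", "tags": ["finance", "daily"]}

captured_owner = owner_urn(dag_config["owner"])
captured_tags = [tag_urn(t) for t in dag_config["tags"]]

print(captured_owner)
print(captured_tags)
```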

UI

On the respective environment, click on the settings tab, and you will see the following screen:

- Airflow 3

- Airflow 2

Toggle the DataHub integration and change the settings where necessary. Finally, persist your changes by clicking on the save button.

CLI

Once the connection is set up, you can enable the integration with the conveyor environment update command:

conveyor environment update --name ENV_NAME --airflow-datahub-integration-enabled=true --deletion-protection=false

After enabling the integration, every task running in that specific Airflow environment should automatically send updates to DataHub.
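If you need to enable the integration on several environments, a small script can build the same command for each one. The sketch below only prints the commands and uses exactly the flags shown above; the environment names `dev` and `prod` and the helper name are placeholders.

```python
import shlex


def enable_datahub_command(env_name: str) -> list[str]:
    """Build the argument list for the update command shown above."""
    return [
        "conveyor", "environment", "update",
        "--name", env_name,
        "--airflow-datahub-integration-enabled=true",
        # Copied from the command above; drop it if you do not want
        # to change the environment's deletion protection.
        "--deletion-protection=false",
    ]


# Placeholder environment names; replace them with your own.
for env in ["dev", "prod"]:
    print(shlex.join(enable_datahub_command(env)))
```

To actually run a command, the list could be passed to `subprocess.run(..., check=True)`, assuming the conveyor CLI is installed and authenticated.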

Terraform

A final way to configure DataHub integration is to use the conveyor_environment resource in Terraform.

For more details, have a look at the environment resource.

Dataset awareness

By default, Airflow's built-in Assets configured in inlets and outlets are only visible in DataHub as a property on your task. To show datasets as first-class entities in DataHub, you have to use the OpenLineage Dataset class when defining your inlets and outlets.

- Airflow 3

- Airflow 2

Here is an example of how to define a dataset using OpenLineage combined with Airflow assets for data-aware scheduling:

    from openlineage.client.event_v2 import Dataset
    from airflow.sdk.definitions.asset import Asset

    ConveyorContainerOperatorV2(
        ...
        dag=dag,
        inlets=[
            # OpenLineage dataset: shown as a first-class entity in DataHub
            Dataset(namespace="s3://input-bucket", name="my-data/raw"),
        ],
        outlets=[
            Dataset(namespace="s3://input-bucket", name="my-data/normalized"),
            # Airflow asset: used for data-aware scheduling
            Asset(uri="s3://input-bucket/my-data/normalized"),
        ],
    )
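The example above writes the same location twice: once as a full URI for the Airflow asset and once as a namespace and name pair for the lineage dataset. A small helper can derive the pair from the URI so the two stay in sync. This is a sketch under the assumption that the namespace is the scheme plus bucket and the name is the object path, as in the example; `split_dataset_uri` is a hypothetical helper, not part of any library.

```python
from urllib.parse import urlparse


def split_dataset_uri(uri: str) -> tuple[str, str]:
    """Split a URI such as s3://bucket/path into (namespace, name)."""
    parsed = urlparse(uri)
    namespace = f"{parsed.scheme}://{parsed.netloc}"
    name = parsed.path.lstrip("/")
    return namespace, name


namespace, name = split_dataset_uri("s3://input-bucket/my-data/normalized")
print(namespace)  # s3://input-bucket
print(name)       # my-data/normalized
```

The resulting values can then be passed as the `namespace` and `name` arguments of the lineage dataset, while the original URI goes to the asset.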

Here is an example of how to define a dataset using the DataHub Airflow 2 plugin combined with Airflow datasets for data-aware scheduling:

    from airflow.datasets import Dataset
    from datahub_provider.entities import Dataset as DatahubDataset

    ConveyorContainerOperatorV2(
        ...
        dag=dag,
        inlets=[
            # DataHub dataset: shown as a first-class entity in DataHub
            DatahubDataset("s3://input-bucket", "my-data/raw"),
        ],
        outlets=[
            # Airflow dataset: used for data-aware scheduling
            Dataset("s3://input-bucket/my-data/normalized"),
            DatahubDataset("s3://input-bucket", "my-data/normalized"),
        ],
    )